Browser fingerprinting attack and defense with PhantomJS

PhantomJS is a headless browser that, when driven through Selenium, becomes a powerful, scriptable tool for scraping or automated web testing, even in JavaScript-heavy applications. We’ve known for a long time that browsers are fingerprinted to identify individual visits to a website. The technique is a common feature of web analytics tools: they want to know as much as possible about their users, so why not collect identifying information?

Attack (or active defense)

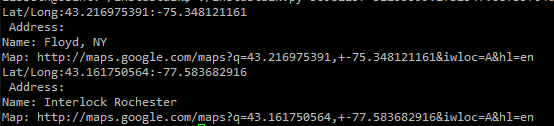

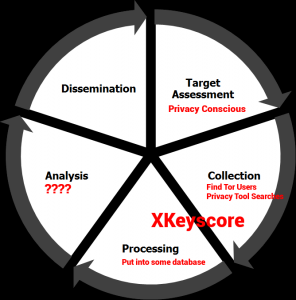

The scenario here is that you, as a privacy-conscious Internet user, have taken various steps to hide your IP, maybe using Tor or a VPN service, and you’ve changed the default UserAgent string in your browser. But because you use the same browser and visit similar pages from different IPs, the web site can still track your activity when your IP changes. Say, for instance, you go on Reddit and you have the same 5 subreddits that you look at. You switch IPs all the time, but because your browser is so individualistic, they can track your visits across multiple sessions.
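To make the scenario concrete, here is a minimal sketch of how a site could link visits without looking at the IP at all. The attribute names and values are hypothetical, and real fingerprinting scripts collect far more than this; the point is only that the identifier is derived from the browser, not the network address.

```python
import hashlib
import json


def fingerprint(attributes):
    """Hash a dict of browser attributes into a short, stable identifier."""
    # Sort keys so the same attributes always serialize the same way.
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical values a page script might collect from one browser.
browser = {
    "user_agent": "Mozilla/5.0 (Windows NT 6.1; rv:31.0) Gecko/20100101 Firefox/31.0",
    "screen": "1920x1080",
    "timezone_offset": -300,
    "fonts": ["Arial", "Comic Sans MS", "Times New Roman"],
}

# Two visits from different IPs (documentation-range addresses).
visits = [
    {"ip": "198.51.100.7", "fp": fingerprint(browser)},
    {"ip": "203.0.113.42", "fp": fingerprint(browser)},
]

# The IP never enters the hash, so both visits map to the same identifier.
same_browser = visits[0]["fp"] == visits[1]["fp"]
print(same_browser)  # True
```

Changing any single attribute, such as the screen value, produces a different identifier, which is why spoofing individual values is worth experimenting with.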

Lots of interesting companies are jumping on this, not only for web analytics but also from the security point of view. Mykonos, now owned by Juniper, built its business around this idea. It would fingerprint individual users, and if one of them launched an attack, it could track them across multiple sessions or IPs by fingerprinting their browsers. They call this an active defense tactic because they are actively collecting information about you and defending the web application.

The best proof-of-concepts I know of are BrowserSpy.dk and the EFF’s Panopticlick project. These sites show what kind of passive information can be collected from your browser and used to connect you to an individual browsing session.

Defense

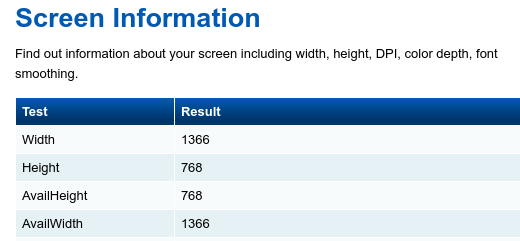

The defense against these fingerprinting attacks is, in a lot of cases, to disable JavaScript. But as the Tor Project accepts, disabling JavaScript is in itself a fingerprintable property. The Tor Browser has been working on this problem for years; it’s a difficult game. If you look through BrowserSpy’s library of examples, there are common and tough-to-fight POCs. One is to read the fonts installed on your computer. If you’ve ever installed that cute custom font, it suddenly makes your browser exponentially more identifiable. One of my favorites is the screen resolution. This doesn’t refer to window size, which is separate; it means the resolution of your monitor or screen. Unfortunately, in a standard browser there’s no way to control this beyond running your system at a different resolution. You might say this isn’t that big of a deal because you’re running at 1920×1080, but think about mobile devices, which have model-specific resolutions that could tell an attacker the exact make and model of your phone.
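Panopticlick expresses this in bits of identifying information: the rarer a value is, the more bits it gives away. The sketch below shows the arithmetic only. The shares are invented for illustration and are not measurements, and simply adding the bits assumes the attributes are independent, which real browser attributes often are not.

```python
import math


def surprisal_bits(share):
    """Bits of identifying information revealed by a value seen in `share` of browsers."""
    return -math.log2(share)


# Hypothetical shares, invented for illustration only.
shares = {
    "screen 1920x1080": 0.25,      # common value, reveals little
    "rare custom font": 1 / 1024,  # rare value, reveals a lot
}

bits = {name: surprisal_bits(p) for name, p in shares.items()}

# If the attributes were independent, their bits would simply add up.
total_bits = sum(bits.values())

for name, b in bits.items():
    print(f"{name}: {b:.1f} bits")
print(f"combined (assuming independence): {total_bits:.1f} bits")
```

With these made-up numbers, the common resolution contributes 2 bits while the rare font contributes 10, so a single unusual value can dominate the fingerprint.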

PhantomJS

There’s no fix. But like all fix-less things, it’s fun to at least try. I’ve used PhantomJS in the past for automating interactions with web applications. You can write Selenium scripts to automate all kinds of stuff, like visiting a web page, clicking a button, and taking a screenshot of the result. Security Bods (as they’re calling them now) have been using it for years.
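As a rough sketch of that visit, click, and screenshot flow, the helper below works with any Selenium-style driver object. The function name, the button id, and the output path are hypothetical; only `get`, `find_element`, `click`, and `save_screenshot` are Selenium calls.

```python
def visit_click_capture(driver, url, button_id, path):
    """Load `url`, click the element with id `button_id`, save a screenshot to `path`."""
    driver.get(url)
    # "id" is the locator strategy string that Selenium's By.ID stands for.
    driver.find_element("id", button_id).click()
    # save_screenshot returns True on success and False on an I/O error.
    return driver.save_screenshot(path)
```

With an older Selenium release that still ships the PhantomJS driver, `driver` would be something like `webdriver.PhantomJS()`, and a call might look like `visit_click_capture(driver, url, "submit", "result.png")`.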

Creating a simple web page screen scraper is as easy as a few lines of Python. This ends up being pretty nice, especially when your friends send you all kinds of malicious stuff to see if you’ll click it. 🙂 This is very simple in Selenium, but I wanted to attempt to not look so script-y. The example below is how you would change the useragent string using Selenium:

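```python
from selenium import webdriver
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

# Pretend to be Firefox 31 on Windows 7
ua = 'Mozilla/5.0 (Windows NT 6.1; rv:31.0) Gecko/20100101 Firefox/31.0'

# Copy the default PhantomJS capabilities and override the user agent
dc = dict(DesiredCapabilities.PHANTOMJS)
dc["phantomjs.page.settings.userAgent"] = ua

browser = webdriver.PhantomJS(
    "phantomjs",
    service_args=self.proxysettings,  # PhantomJS command-line flags, set elsewhere in the class
    desired_capabilities=dc
)
browser.get("")
```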

Playing around with this started to bring up questions like: since PhantomJS doesn’t in fact have a screen, what would my screen resolution be? The answer is 1024×768.

This arbitrarily assigned value is pretty great. That means we can replace this value with something else. It should be noted that even though you set this value to something different, it doesn’t affect the size of your window. To defend against being “Actively Defended” against, you can change the PhantomJS code and recompile.
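One way to see both values from a script is to ask the page’s JavaScript directly. This is a minimal sketch: the helper names are hypothetical, and the check that the window fits inside the screen is my own sanity test rather than anything a particular fingerprinting product is known to do. It only relies on Selenium’s `execute_script`.

```python
def reported_sizes(driver):
    """Return the screen and window sizes that page JavaScript can see."""
    return driver.execute_script(
        "return {"
        " screen: [screen.width, screen.height],"
        " window: [window.innerWidth, window.innerHeight]"
        "};"
    )


def window_fits_screen(info):
    """True if the window is no larger than the reported screen in both dimensions."""
    screen_w, screen_h = info["screen"]
    window_w, window_h = info["window"]
    return window_w <= screen_w and window_h <= screen_h
```

Calling `reported_sizes(browser)` on a stock PhantomJS session should show the 1024×768 screen mentioned above, while the window entry depends on whatever size the script set for the window.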

```cpp
PhantomIntegration::PhantomIntegration()
{
    PhantomScreen *mPrimaryScreen = new PhantomScreen();

    // Simulate typical desktop screen: pick one of five common resolutions
    int widths[5]  = { 1024, 1920, 1366, 1280, 1600 };
    int heights[5] = {  768, 1080,  768, 1024,  900 };
    // Index must be in 0..4 to stay inside the arrays.
    // Note: unless rand() is seeded (e.g. with srand), every process draws the same value.
    int ranres = rand() % 5;
    int width = widths[ranres];
    int height = heights[ranres];
    int dpi = 72;
    qreal physicalWidth = width * 25.4 / dpi;
    qreal physicalHeight = height * 25.4 / dpi;
    mPrimaryScreen->mGeometry = QRect(0, 0, width, height);
    mPrimaryScreen->mPhysicalSize = QSizeF(physicalWidth, physicalHeight);

    mPrimaryScreen->mDepth = 32;
    mPrimaryScreen->mFormat = QImage::Format_ARGB32_Premultiplied;
    // (excerpt: the rest of the constructor is not shown)
```

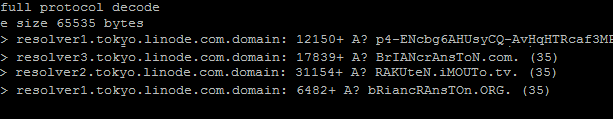

This picks one of a handful of common screen resolutions at random every time a new webdriver browser is created. You can test it back at BrowserSpy.

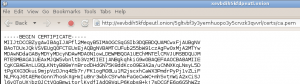

Old:

And so on…

And we’ve now spoofed a single fingerprintable value, with only another few thousand to go. In the end, is this better than scripting something like Firefox? Unknown. But the offer still stands: if someone at Juniper wants to provide me with a demo, I’d provide free feedback on how well it stands up to edge cases like me.
